· Mobile App Development · 20 min read

Blog Application with Node, Express, PostgreSQL and Knex.js

In this step by step guide we will learn how to create a Blog application with Node and PostgreSQL with Knex.js ORM.

Overview

This step-by-step tutorial is a beginner-friendly introduction to connecting your Node backend application to a PostgreSQL database and leveraging Knex.js, an Object-Relational Mapping (ORM) library.

Tech Stack:

Below, I’ll explain the technologies used in this tutorial. Feel free to skip this section if you’re already familiar with these tools.

Node — A JavaScript runtime built on Chrome’s V8 JavaScript engine. The key takeaway is that we can write server-side JavaScript to create full-stack applications entirely in JavaScript.

npm — Included with Node.js, npm (Node Package Manager) is a robust tool for managing dependencies, tracking publicly available code packages, and specifying package versions.

Express — A web development framework for handling HTTP requests and responses in Node.js. It makes it easier to create APIs and manage routing.

Knex.js — A query builder and ORM for Node.js that allows you to interact with your SQL database in a more structured, JavaScript-friendly way.

PostgreSQL — A powerful relational database management system that will store your data. While MongoDB is also commonly paired with Node, PostgreSQL is a great choice for structured data storage.

Docker - A platform for running containerized applications, which helps isolate dependencies and ensure consistency across environments.

Initial Setup

To follow along with this tutorial, you’ll need to install the following technologies. Most of these installations are done via the terminal command line and should work fine on macOS, Linux, or Windows (using cmd or PowerShell).

Node

The easiest way to install Node.js is by usingNVM(Node Version Manager). Alternatively, you can download and install Node.js from the Official Website.

curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.40.1/install.sh | bash

# OR using wget

wget -qO- https://raw.githubusercontent.com/nvm-sh/nvm/v0.40.1/install.sh | bash

Make sure to add NVM to your environment variables (note: Windows installation can be a bit tricky):

export NVM_DIR="$([ -z "${XDG_CONFIG_HOME-}" ] && printf %s "${HOME}/.nvm" || printf %s "${XDG_CONFIG_HOME}/nvm")"

[ -s "$NVM_DIR/nvm.sh" ] && \. "$NVM_DIR/nvm.sh" # This loads nvm

After installing, verify that NVM is correctly installed:

nvm -v

# OR

nvm --version

# Expected out: 0.40.1 or another version number

Now, install Node.js using NVM. The good thing about NVM is that it allows you to have multiple versions of Node installed and switch between them as needed.

nvm install node # OR

nvm install --lts # This will install the latest LTS version

# To install a specific version of Node, use `nvm ls-remote` to list remote versions

nvm ls-remote #

# OR

nvm ls-remote --lts #Will list only the latest LTS versions

nvm install 18.1.0

# To see the versions of Node installed on your machine and switch to a different version:

nvm ls

nvm use <version>

Once Node is installed, you can confirm the installation by checking the version:

node -v

Docker

The easiest way to use Docker is to install Docker Desktop (Free for personal use). Alternatively, you can install docker via Homebrew (on macOS)

# # To install Homebrew (if not already installed)

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

# Update Homebrew

brew update

# Install Docker

brew install --cask docker

# Verify that Docker is installed

docker --version # Expected output: Docker version 27.0.3, build 7d4bcd8

# To Open Docker

open /Applications/Docker.app

Run PostgreSQL

With Docker up and running, we can now pull the official PostgreSQL Docker image and run it on your machine.

Pull the PostgreSQL Docker image: Use the following command to pull the latest PostgreSQL image from Docker Hub:

docker pull postgres

Run the PostgreSQL container: To start a PostgreSQL container, run the following command. This will create a new PostgreSQL instance running inside a container:

docker run --name postgres-container -e POSTGRES_USER=myuser -e POSTGRES_PASSWORD=mypassword -e POSTGRES_DB=mydb -p 5432:5432 -d postgres

--name postgres-container gives the container a name.-e POSTGRES_USER=myuser sets the username for the PostgreSQL instance.-e POSTGRES_PASSWORD=mypassword sets the password for the PostgreSQL user.-e POSTGRES_DB=mydb sets the name of the database to create.-p 5432:5432 maps port 5432 on the container to port 5432 on your machine (default PostgreSQL port).-d postgres runs the container in detached mode, so it runs in the background.

Verify the container is running: To check if the PostgreSQL container is running correctly, use:

docker ps

#You should see an entry for postgres-container in the list of running containers.

Access the PostgreSQL container: You can access the running PostgreSQL container by executing the following command:

docker exec -it postgres-container psql -U myuser -d mydb # you need to enter your password (in our case `mypassword`)

This command opens an interactive terminal inside the container and connects to the mydb database using the myuser credentials.

Postman

To test our APIs we’ll use Postman Downlaod from Official Website

Node Project Setup

Now that we have Node and npm set up, let’s create a simple Node.js project using Express and set up automatic server reload with nodemon.

1. Navigate to your project folder: Open your terminal and navigate to the folder where you want to store your project. You can also open the folder in your preferred IDE (e.g., VSCode):

cd /path/to/your/project/folder

# If you prefer using an IDE like VSCode, you can open the folder directly:

code /path/to/your/project/folder

2. Initialize the project: Once you’re in your project folder, run the following command to initialize a new Node.js project. This will create a package.json file with default values.

npm init -y

3. Install Required Dependencies: Install the essential dependencies for your project:

- Express: Web framework for Node.js.

- Knex.js: SQL query builder for interacting with your database.

- pg: PostgreSQL client for Node.js.

- dotenv: To load environment variables (configurations)

- cors: Middleware to enable CORS Run this command to install them:

npm install express knex pg dotenv cors

Additionally, install nodemon as a development dependency for automatically restarting the server whenever you make changes:

npm install -D nodemon

4. Update package.json Scripts: In your package.json file, you’ll see a section for scripts. Add a dev script to run your application with nodemon, like this:

{

"name": "knex-tutorial",

"version": "1.0.0",

"main": "index.js",

"scripts": {

"dev": "nodemon index.js" // you need to update this

},

"keywords": [],

"author": "",

"license": "ISC",

"description": "",

"dependencies": {

"cors": "^2.8.5",

"dotenv": "^16.4.7",

"express": "^4.21.1",

"knex": "^3.1.0",

"pg": "^8.13.1"

},

"devDependencies": {

"nodemon": "^3.1.7"

}

}

5. Create the index.js File: Now, create the index.js file in your project folder and add the following code to set up a basic Express server:

// Import express

const express = require('express');

const cors = require('cors')

// Create an express app

const app = express();

app.use(cors()); // you can allow specific origins (domains)

// Define the port the server will run on

const PORT = process.env.PORT || 3000;

// Start the server

app.listen(PORT, () => {

console.log(`Server is running on port ${PORT}`);

});

6. Run the Application: To run the server with nodemon, use the following command:

npm run dev

You should see the following output:

> knex-tutorial@1.0.0 dev

> nodemon index.js

[nodemon] 3.1.7

[nodemon] to restart at any time, enter `rs`

[nodemon] watching path(s): *.*

[nodemon] watching extensions: js,mjs,cjs,json

[nodemon] starting `node index.js`

Server is running on port 3000

7. Test Your Server: Open your browser and go to http://localhost:3000. You should see your server running and able to respond to requests.

Knex Configuration

Now that our server is up and running, it’s time to integrate the database. Knex.js offers a great deal of flexibility when it comes to interacting with databases. You can use Knex in a code-first approach to design your database schema, create tables, seed data, and handle database transactions. Additionally, Knex allows you to configure different database environments (development, staging, production) with ease.

1. Install Knex Globally: To get started with Knex, you’ll first need to install it globally on your machine. This will allow you to use Knex’s CLI tools for database migrations, seeding, and more. Run the following command to install Knex globally:

npm install -g knex

2. Verify Installation: Once the installation is complete, verify that Knex was installed correctly by checking its version:

knex --version

The output should look something like this:

Knex CLI version: 3.1.0

Knex Local version: 3.1.0

3. Knex for Database Management: Knex simplifies working with databases in a programmatic manner, making it easier to manage schemas, data migrations, and seed files. Here are some key features Knex provides:

Code-first Database Design: You can define and manage your tables and relationships through JavaScript code instead of manually writing SQL.Migrations: You can create version-controlled migrations that help you track changes to your database schema over time.Seeding: Easily seed data into your database for testing or initial setup.Environment-Specific Configuration: Knex allows you to set up different configurations for development, staging, and production environments, ensuring that your app works correctly across different environments.

Database Configuration

To start the database integration with your node application. creating the knexfile is essential. The knexfile contains configuration settings for connecting to your database, including different environments like development, staging, and production. To create knexfile you can run the following and it will create the knexfile.js fo you:

knex init

2. Configure the knexfile.js for Different Environments: Open the knexfile.js and configure it as follows:

// Update with your config settings.

/**

* @type { Object.<string, import("knex").Knex.Config> }

*/

module.exports = {

development: {

client: 'sqlite3',

connection: {

filename: './dev.sqlite3'

}

},

staging: {

client: 'postgresql',

connection: {

database: 'my_db',

user: 'username',

password: 'password'

},

pool: {

min: 2,

max: 10

},

migrations: {

tableName: 'knex_migrations'

}

},

production: {

client: 'postgresql',

connection: {

database: 'my_db',

user: 'username',

password: 'password'

},

pool: {

min: 2,

max: 10

},

migrations: {

tableName: 'knex_migrations'

}

}

};

Since we’re in development mode, let’s update the development configurations:

require('dotenv').config(); // <-- Update this

/**

* @type { Object.<string, import("knex").Knex.Config> }

*/

module.exports = {

development: {

client: 'pg', // we use `pg`(https://www.npmjs.com/package/pg) as client

connection: {

database: process.env.DB_NAME ,

user: process.env.DB_USER ,

password: process.env.DB_PASSWORD,

host: process.env.DB_HOST,

port: process.env.DB_PORT

},

migrations: {

directory: "./data/migrations" // make sure to create data/migrations folder

},

seeds: { directory: "./data/seeds", timestampFilenamePrefix: true } // make sure to create data/seeds folder

},

// ... rest of the code

};

NOTE: Make sure to add the following configuration in your .env file:

DB_NAME=my_db

DB_USER=myuser

DB_PASSWORD=mypassword

DB_HOST=localhost

DB_PORT=5432

Creating tables

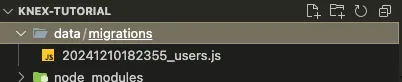

We now use the Knex Migration CLI to create our database tables. Make sure you run the following commands from the root directory of your project.

knex migrate:make users # knex migrate: make table_name

The command above will generate a migration file (the code to create a table in the database) and place it in the data/migrations folder.  The Migration file contains two methods.

The Migration file contains two methods. up and down.

/**

* @param { import("knex").Knex } knex

* @returns { Promise<void> }

*/

exports.up = function(knex) {

};

/**

* @param { import("knex").Knex } knex

* @returns { Promise<void> }

*/

exports.down = function(knex) {

};

upMethod:

- The up method is used to apply changes to the database

schema, such ascreating tables,adding columns,modifying columns, orcreating indexes. - It’s called when we run

knex migrate:latest, which applies all the pending migrations. - Think of up as the process of applying changes.

downMethod:

- The down method defines how to

undothe changes made in the up method. This is typically used for rolling back a migration. - It’s called when you run

knex migrate:rollback, which undoes the most recent migration. - Think of down as the process of

reversing or rolling backchanges.

Now let’s makes the changes to our users table:

/**

* @param { import("knex").Knex } knex

* @returns { Promise<void> }

*/

exports.up = function(knex) {

return knex.schema.createTable('users', function(table) {

table.increments('id').primary();

table.string('name');

table.string('email').unique();

table.timestamps();

});

};

/**

* @param { import("knex").Knex } knex

* @returns { Promise<void> }

*/

exports.down = function(knex) {

return knex.schema.dropTableIfExists('users');

};

NOTE: table.timestamps() will create two table columns, created_at and updated_at Similarly, we can create the posts table:

knex migrate:make posts

/**

* @param { import("knex").Knex } knex

* @returns { Promise<void> }

*/

exports.up = function (knex) {

return knex.schema.createTable('posts', function (table) {

table.increments('id').primary();

table.integer('created_by');

table.foreign('created_by').references('id').inTable('users');

table.string('title');

table.text('content');

table.timestamps();

});

};

/**

* @param { import("knex").Knex } knex

* @returns { Promise<void> }

*/

exports.down = function (knex) {

return knex.schema.dropTableIfExists('posts');

};

NOTE: in the above posts migration file, we’ve established a relationship between the posts table and the users tables. Knex provides a variety of useful methods to handle such operations efficiently. Now, let’s run our migrations. This will create the respective tables in the database along with their relationships.

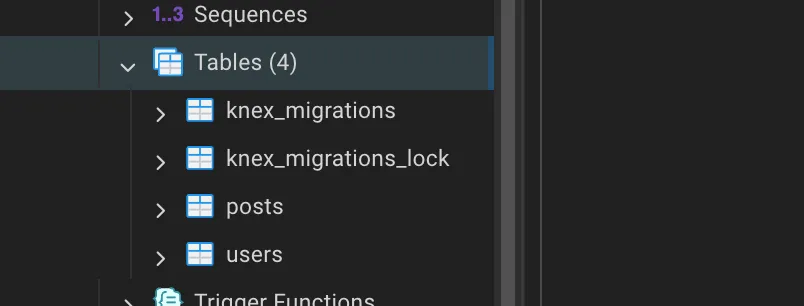

knex migrate:latest

This will create four tables: two for tracking migrations and the other two are users and posts tables.  A few other Migration commands:

A few other Migration commands:

| Command | Description |

|---|---|

knex migrate:make <name> | Creates a new migration file. |

knex migrate:latest | Runs all pending migrations. |

knex migrate:latest --env production | Runs all pending migrations for production env. |

knex migrate:rollback | Rolls back the most recent batch of migrations. |

knex migrate:rollback --all | Rolls back all the completed migrations |

knex migrate:status | Shows the status of all migrations (applied or pending). |

knex migrate:currentVersion | Shows the version of the most recent applied migration. |

knex migrate:up | To run the next migration that has not yet been run. |

knex migrate:up <migration.js> | To run a specific migration by name. |

knex migrate:down <migration.js> | Manually rolls back a specific migration by name. |

Seeding data

Now that we have created our tables, let’s seed (insert data into) them with fake data. Seeding data is typically done to test the application and ensure it behaves as expected with realistic inputs. To accomplish this, we can use knex seed to insert data into our tables. Similar to migrations, Knex also provides a Seed CLI to help with seeding operations.

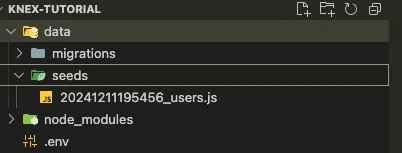

knex seed:make users # knex seed:make seed_name

The command above will generate a seed file, which contains the code to insert data into the database, and place it in the data/seeds folder.

The seed file includes a seed method that will populate the database with data when executed.

To seed fake data, let’s install faker.js package:

npm install --save-dev @faker-js/faker

Now, let’s add 100 fake users into our users table:

const { faker } = require('@faker-js/faker');

/**

* @param { import("knex").Knex } knex

* @returns { Promise<void> }

*/

exports.seed = async function (knex) {

// Deletes ALL existing entries

await knex('users').del()

const users = [];

for (let i = 0; i < 100; i++) {

const user = {

name: faker.person.fullName(),

email: faker.internet.email(),

created_at: faker.date.anytime(),

updated_at: faker.date.anytime(),

};

users.push(user);

}

await knex('users').insert(users);

};

To insert fake data into the database, you can run the following:

knex seed:run

The command above will run all the seed files located in the data/seeds folder. However, if you want to run a specific seed file, you can do so as follows:

knex seed:run --specific=seed-filename.js --specific=another-seed-filename.js

Seeding data into the posts table can be a little tricky because we have a foreign key relationship with the users table. First, we need to select user IDs from the users table and then randomly assign a post to a user. To select the id column from the users table, we can use the pluck method in Knex, and then use Math.random() to select a random ID. Here’s the complete code for the posts seed file:

const { faker } = require('@faker-js/faker');

const getColumnData = async (knex, tableName, columnName) => {

return new Promise((resolve, reject) => {

knex(tableName).pluck(columnName).then(async (columnData) => {

resolve(columnData);

});

})

}

/**

* @param { import("knex").Knex } knex

* @returns { Promise<void> }

*/

exports.seed = async function (knex) {

// Deletes ALL existing entries

await knex('posts').del()

const userIds = await getColumnData(knex, "users", "id");

const posts = [];

for (let i = 0; i < 100; i++) {

const randomUser = Math.floor(Math.random() * userIds.length);

const randomUserId = userIds[randomUser];

const post = {

title: faker.lorem.sentence(),

content: faker.lorem.paragraphs(4),

created_by: randomUserId,

created_at: faker.date.anytime(),

updated_at: faker.date.anytime(),

}

posts.push(post);

}

await knex('posts').insert(posts);

};

Since we’ve already run the users seed file, this time we only need to run the posts seed file. You can do it like this:

knex seed:run --specific=20241211204745_posts.js

Seed Commands:

| Command | Description |

|---|---|

knex seed:make <name> | Creates a new seed file. |

knex seed:run | Runs all seed files to populate the database with data. |

knex seed:run --specific=<name> | Runs a specific seed file by name. |

knex seed:run --directory=<path> | Runs seed files from a specific directory. |

knex seed:run --quiet | Suppresses output while running the seed files. |

Creating Routes

Now it’s time to create the API routes to perform CRUD operations. Let’s create the user routes and place them inside the routes folder.

Inside the routes folder, create a file called users_routes.js. This file will contain the routes for performing CRUD operations on users. The code might look like this:

const express = require('express');

const router = express.Router();

// Get environment variable, defaulting to "development"

const env = process.env.NODE_ENV || "development";

// Knex.js configuration file for database connection

const knexConfig = require('../knexfile');

const db = require("knex")(knexConfig[env]);

/**

* GET /users

*

* Endpoint to fetch all users from the database.

*

* @returns {Array} An array of user objects.

*/

router.get("/", (req, res) => {

db("users")

.then(users => {

res.json(users);

})

.catch(err => {

res.status(500).json({ error: "Failed to fetch users" });

});

});

/**

* GET /users/:id

*

* Endpoint to fetch a single user by ID from the database.

*

* @param {number} id - The ID of the user to fetch.

* @returns {Object} The user object corresponding to the provided ID.

* @throws 404 - If no user with the provided ID is found.

*/

router.get("/:id", (req, res) => {

const { id } = req.params;

db("users")

.where({ id })

.first()

.then(user => {

if (!user) {

return res.status(404).json({ error: "User not found" });

}

res.json(user);

})

.catch(err => {

res.status(500).json({ error: "Failed to fetch user" });

});

});

/**

* POST /users

*

* Endpoint to create a new user in the database.

*

* @body {Object} user - The user data to be created. Must contain:

* - `name`: The name of the user.

* - `email`: The email of the user.

*

* @returns {Object} The created user object with the assigned ID.

* @throws 400 - If required fields are missing or invalid.

*/

router.post("/", (req, res) => {

const { name, email } = req.body;

// Basic validation of required fields

if (!name || !email) {

return res.status(400).json({ error: "Name and email are required" });

}

db("users")

.insert({ name, email })

.returning("*")

.then(user => {

res.status(201).json(user[0]);

})

.catch(err => {

res.status(500).json({ error: "Failed to create user" });

});

});

/**

* PUT /users/:id

*

* Endpoint to update an existing user by ID.

*

* @param {number} id - The ID of the user to update.

* @body {Object} user - The user data to be updated. Optional fields:

* - `name`: The name of the user.

* - `email`: The email of the user.

*

* @returns {Object} The updated user object.

* @throws 400 - If no data is provided for updating.

* @throws 404 - If the user with the provided ID is not found.

*/

router.put("/:id", (req, res) => {

const { id } = req.params;

const { name, email } = req.body;

if (!name && !email) {

return res.status(400).json({ error: "No data provided to update" });

}

db("users")

.where({ id })

.update({ name, email })

.returning("*")

.then(user => {

if (!user.length) {

return res.status(404).json({ error: "User not found" });

}

res.json(user[0]);

})

.catch(err => {

res.status(500).json({ error: "Failed to update user" });

});

});

/**

* DELETE /users/:id

*

* Endpoint to delete a user by ID.

*

* @param {number} id - The ID of the user to delete.

*

* @returns {Object} Confirmation of the deletion.

* @throws 404 - If no user with the provided ID is found.

*/

router.delete("/:id", (req, res) => {

const { id } = req.params;

db("users")

.where({ id })

.del()

.then(count => {

if (!count) {

return res.status(404).json({ error: "User not found" });

}

res.json({ message: "User deleted successfully" });

})

.catch(err => {

res.status(500).json({ error: "Failed to delete user" });

});

});

module.exports = router;

Here is the code for posts_routes.js that you can place inside the routes folder.

const express = require('express');

const router = express.Router();

// Get environment variable, defaulting to "development"

const env = process.env.NODE_ENV || "development";

// Knex.js configuration file for database connection

const knexConfig = require('../knexfile');

const db = require("knex")(knexConfig[env]);

/**

* GET /posts

*

* Endpoint to fetch all posts from the database.

*

* @returns {Array} An array of post objects.

*/

router.get("/", (req, res) => {

db("posts")

.then(posts => {

res.json(posts);

})

.catch(err => {

res.status(500).json({ error: "Failed to fetch posts" });

});

});

/**

* GET /posts/:id

*

* Endpoint to fetch a single post by ID from the database.

*

* @param {number} id - The ID of the post to fetch.

* @returns {Object} The post object corresponding to the provided ID.

* @throws 404 - If no post with the provided ID is found.

*/

router.get("/:id", (req, res) => {

const { id } = req.params;

db("posts")

.where({ id })

.first()

.then(post => {

if (!post) {

return res.status(404).json({ error: "Post not found" });

}

res.json(post);

})

.catch(err => {

res.status(500).json({ error: "Failed to fetch post" });

});

});

/**

* POST /posts

*

* Endpoint to create a new post in the database.

*

* @body {Object} post - The post data to be created. Must contain:

* - `title`: The title of the post.

* - `content`: The content of the post.

* - `created_by`: The ID of the user who created the post.

*

* @returns {Object} The created post object with the assigned ID.

* @throws 400 - If required fields are missing or invalid.

*/

router.post("/", (req, res) => {

const { title, content, created_by } = req.body;

const created_at = new Date().toISOString();

// Basic validation of required fields

if (!title || !content || !created_by) {

return res.status(400).json({ error: "Title, content, and created_by are required" });

}

db("posts")

.insert({ title, content, created_by, created_at})

.returning("*")

.then(post => {

res.status(201).json(post[0]);

})

.catch(err => {

res.status(500).json({ error: "Failed to create post" });

});

});

/**

* PUT /posts/:id

*

* Endpoint to update an existing post by ID.

*

* @param {number} id - The ID of the post to update.

* @body {Object} post - The post data to be updated. Optional fields:

* - `title`: The title of the post.

* - `content`: The content of the post.

* - `updated_at`: The timestamp when the post was last updated.

*

* @returns {Object} The updated post object.

* @throws 400 - If no data is provided for updating.

* @throws 404 - If the post with the provided ID is not found.

*/

router.put("/:id", (req, res) => {

const { id } = req.params;

const { title, content } = req.body;

const updated_at = new Date().toISOString();

if (!title && !content) {

return res.status(400).json({ error: "No data provided to update" });

}

db("posts")

.where({ id })

.update({ title, content, updated_at })

.returning("*")

.then(post => {

if (!post.length) {

return res.status(404).json({ error: "Post not found" });

}

res.json(post[0]);

})

.catch(err => {

res.status(500).json({ error: "Failed to update post" });

});

});

/**

* DELETE /posts/:id

*

* Endpoint to delete a post by ID.

*

* @param {number} id - The ID of the post to delete.

*

* @returns {Object} Confirmation of the deletion.

* @throws 404 - If no post with the provided ID is found.

*/

router.delete("/:id", (req, res) => {

const { id } = req.params;

db("posts")

.where({ id })

.del()

.then(count => {

if (!count) {

return res.status(404).json({ error: "Post not found" });

}

res.json({ message: "Post deleted successfully" });

})

.catch(err => {

res.status(500).json({ error: "Failed to delete post" });

});

});

module.exports = router;

Now we need to import these routes in our index.js as follow:

// ... Code before

const usersRoutes = require('./routes/users_routes');

const postsRoutes = require('./routes/posts_routes');

app.use("/users", usersRoutes);

app.use("/posts", postsRoutes);

//... Code after

Testing APIs

Now that we’ve created the users and posts routes, we can test them using Postman to ensure everything is functioning correctly.

For Example:

GET /users

- URL:

http://localhost:5000/users - Example Response:

[ { "id": 1, "name": "John Doe", "email": "john@example.com" }, { "id": 2, "name": "Jane Doe", "email": "jane@example.com" } ]